Oleksandr Monastyrskyi

Analyst in the field of countering disinformation and improving media literacy, “Independent Media” program, o.monastyrskyi@cedem.org.ua

Oleksiy Tretyakov-Grodzevych

Project Manager of the “Independent Media” program, o.tretyakovgrodzevych@cedem.org.ua

The modern world is accelerating, presenting people with a growing number of challenges that, for various reasons, we do not always manage to overcome. This trend has also reached the information space, where we try every day to find the truth, compare facts, and analyze events. However, it is crucial to understand that humans have physical and cognitive limitations, as well as vulnerabilities in the way they think: vulnerabilities that our adversaries know well and exploit every day to make us believe in their version of reality.

While this is largely relevant to the information war between Ukraine and Russia, it also applies to quite ordinary aspects of life. Cognitive manipulations that exploit the flaws of human thinking are widely used in marketing campaigns aimed at persuading consumers to purchase specific products, even if they do not need them or the price is inflated. A relevant example is the well-known “Black Friday” phenomenon. We are being manipulated from all sides…

Introduction

To ensure clarity and understanding, it is essential to define key terms – what we consider manipulation and cognitive bias.

It is worth noting that there are numerous definitions and interpretations of these phenomena. However, we will focus on the most common and broadly accepted ones.

Researchers define manipulation as a form of psychological influence: “By manipulation, we mean a form of influence where the manipulator attempts to modulate the emotional states of the manipulated subject by offering a specific goal (an action, a product, etc.)”. Simply put, the manipulator seeks to provoke certain, often intense, emotions in the target and, at that moment, introduce specific products, decisions, or actions.

Regarding cognitive biases, Daniel Kahneman, author of the popular book Thinking, Fast and Slow, describes the heuristic, the mental shortcut from which many of these biases arise, as “a simple procedure that helps find adequate, though often imperfect, answers to complex questions”. In other words, our brain tends to drastically simplify complex facts, situations, and perceptions. Relying on past experiences, thoughts, impressions, emotions, and other factors, we look for quick and easy answers to intricate, multifaceted questions.

Researchers from the University of Granada define cognitive bias as “a systematic (i.e., non-random and therefore predictable) deviation from rationality in judgment or decision-making”.

One more term deserves attention here: stereotypes. Although they are not the primary focus of this analysis, they play a significant role in how manipulation and cognitive biases work.

Swedish researchers in their work on social psychology propose the following definition: “Stereotypes are considered the cognitive component of bias and are defined as beliefs about the characteristics, attributes, and behaviors of members of a particular social group. They are also an individual’s theories about how and why certain attributes of a given social group are interconnected”.

Part 1: Cognitive Biases and Their Classification

Richard Thaler is regarded as the father of behavioral economics, a field that brings the real-world, often irrational, decision-making of individuals into economic analysis, which traditionally assumed perfectly rational actors.

Thaler studied how people make economic decisions and arrived at an important conclusion: individuals act under the influence of various external and internal factors that are not always rational. For example, holding a warm drink in your hands can improve your perception of the person you are speaking with.

“The Fly in the Urinal” – A Case of Behavioral Economics

Image generated by ChatGPT

One of Thaler’s favorite examples is the story of “the fly in the urinal”. In 1999, Amsterdam’s Schiphol Airport faced a problem – men’s restrooms were excessively messy, creating difficulties for cleaning staff.

The solution turned out to be simple yet ingenious: images of flies were etched onto the urinals. Men instinctively aimed at the fly, significantly reducing spillage.

This example illustrates the concept of “nudging” – small external stimuli that help steer behavior in a desired direction. Thaler demonstrated that many such nudges are based on cognitive biases – systematic errors in our perception and analysis of the world.

Why Do Cognitive Biases Occur?

Based on the insights of Kahneman in Thinking, Fast and Slow and Thaler in Nudge, we can identify several key factors influencing decision-making:

1. Speed of Decision-Making

Image generated by ChatGPT

The modern world requires us to make hundreds of decisions daily:

- What clothes to wear?

- What to eat for breakfast?

- How to get to work faster?

As life speeds up, we automate some decisions to avoid overwhelming our brains. This can be both beneficial and dangerous. For example, when a caller claims to be from your bank and asks you to press “1” to confirm your identity, you might do so without fully realizing the consequences – falling victim to fraud. On the other hand, automation can be useful for conserving energy, as in the case of Steve Jobs, who wore the same outfit daily to save mental effort for more important decisions.

2. Information Overload

We live in an information-saturated world: social media, news sites, and conversations with friends constantly bombard us with data. This leads to two major problems:

- We cannot process all available information.

- We focus only on specific details that seem the most relevant or emotionally engaging.

This creates fertile ground for biases, as we make decisions based on accessible but incomplete information.

3. Energy Consumption

Image generated by Midjourney

Decision-making requires energy. To conserve mental resources, we automate many processes:

- Tying our shoelaces without thinking.

- Taking the same route to work every day.

However, this automation also affects important decisions:

- Buying a product based on familiar advertising rather than an assessment of its quality.

- Keeping an unnecessary item simply because we already own it.

Cognitive biases are deeply embedded in our thinking, influencing everything from everyday choices to large-scale societal behavior. Understanding these mechanisms allows us to better recognize and counteract manipulative influences.

The Impact of These Factors

These circumstances affect our ability to make well-balanced decisions and make us vulnerable to cognitive biases. They create behavioral patterns that we often do not even realize. Below, we will explore the most common cognitive biases (of which there are over 100!) that influence our lives.

The Anchoring Effect

Have you noticed price tags showing $9.99 in stores? These price points appear very often, and it is not a coincidence. This tactic exploits a form of the anchoring effect that researchers often call the left-digit effect.

When we see a price of $9.99, our subconscious attention focuses on the first digit – 9, rather than recognizing that the price is practically $10. We tend to perceive such a price as significantly lower than it is because the number 9 serves as an “anchor” for our perception.

This effect is widely used in marketing to create the illusion of a better deal. As a result, consumers subconsciously perceive the product as cheaper than an equivalent item priced at $10.00.
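To put rough numbers on the $9.99 example, here is a toy model. It is our own illustration, not a model from the research behind this article: it assumes a shopper reads the whole-dollar part in full but registers only a fraction of the cents. The weight `cents_attention = 0.3` is a hypothetical value chosen purely for illustration.

```python
def perceived_price(price_cents: int, cents_attention: float = 0.3) -> float:
    """Toy left-digit model (hypothetical): whole dollars are read in full,
    while the cents are only partly registered."""
    dollars, cents = divmod(price_cents, 100)
    return dollars + cents_attention * cents / 100


actual_gap = (1000 - 999) / 100  # the real difference between $10.00 and $9.99
perceived_gap = perceived_price(1000) - perceived_price(999)
print(f"actual gap:    ${actual_gap:.2f}")
print(f"perceived gap: ${perceived_gap:.2f}")
```

With this assumed weight, a real difference of one cent feels like a difference of about seventy cents. Real shoppers will differ, but the direction of the effect is the point.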

The anchoring effect demonstrates how easily we can be influenced by the first piece of information we receive, even if it is insignificant. So, next time you see a $9.99 price tag, ask yourself: Is it really a great deal, or just a psychological trick?

Priming

Have you ever noticed how one unpleasant event can ruin your entire day?

For example, you argue with your neighbor in the morning, and suddenly everything seems to go wrong: you step into a puddle, your colleagues misunderstand you at work, and even lunch at your favorite restaurant doesn’t taste as good as usual.

This is an example of priming – a phenomenon where previous events, emotions, or stimuli affect how we perceive new information or situations.

Priming is frequently used in politics because it helps create a strong emotional connection with an audience. One of the most striking examples is Volodymyr Zelenskyy’s announcement of his candidacy in Ukraine’s 2019 presidential election: he made it on television on New Year’s Eve, 31 December 2018, just before the midnight countdown.

Why was this effective?

New Year’s Eve is a moment filled with hope, joy, magic, and anticipation of a better future. People are usually in an elevated emotional state, sitting at a festive table with loved ones. Information received at such a moment is perceived far more positively, as it is reinforced by the overall celebratory atmosphere. It imprints in our minds as something significant and special.

This example perfectly demonstrates how priming works on a subconscious level, shaping perceptions even in serious matters like choosing a political leader.

Framing

“Is the glass half-empty or half-full?” – We have all heard this phrase before, and it perfectly illustrates how framing affects our perception of a situation.

Framing (from the word “frame”) refers to how our understanding of an event or phenomenon is shaped by the way information is presented. A positive frame (“The glass is half-full”) is generally perceived better than a negative one (“The glass is half-empty”), even though both statements are objectively identical.

This technique is widely used in political and public communication to influence public opinion. For example, it is more effective to say:

✔ “Corruption levels have decreased by 3% over the past year.”

✖ Rather than: “Corruption still remains at 97% of last year’s level.”

The first version presents the information as a success and achievement, whereas the second emphasizes the problem that remains unsolved.
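Both corruption statements can be generated from the same pair of numbers, which makes it clear that only the presentation differs. In this sketch, the index values 100 and 97 are hypothetical, and `frame_change` is an illustrative helper, not part of any real tool.

```python
def frame_change(last_year: float, this_year: float) -> tuple[str, str]:
    """Describe the same change in a gain frame and in a loss frame."""
    ratio = this_year / last_year
    decrease_pct = (1 - ratio) * 100  # what has been achieved
    remaining_pct = ratio * 100       # what is still left
    gain = f"Corruption levels have decreased by {decrease_pct:.0f}% over the past year."
    loss = f"Corruption still remains at {remaining_pct:.0f}% of last year's level."
    return gain, loss


gain, loss = frame_change(last_year=100, this_year=97)  # hypothetical index values
print(gain)
print(loss)
```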

The framing effect plays a crucial role in social, political, and economic decisions, as it demonstrates how certain perspectives shape our choices and perceptions of reality.

The Halo Effect

Have you ever wondered why a blogger is advertising a gas station? Just yesterday, they were talking about their cats, and today they’re suddenly convincing you how great this gas station is. And somehow, you’re more likely to believe them.

This is an example of the halo effect, a cognitive bias where our affection for someone based on one characteristic (appearance, sense of humor, expertise in a specific area) extends to other aspects.

If you like a blogger because of their charisma or humor, you’re more likely to believe they are knowledgeable in other areas where they may not be experts.

The halo effect is actively used in marketing: brands collaborate with popular figures, knowing that their popularity creates a “halo of trust” around everything they promote.

So, next time you see such an ad, ask yourself: Is this an informed opinion, or just a play on your affection for the person involved?

The Endowment Effect

Do you have an item at home that you wouldn’t sell even for 1000 UAH, even though its actual value is no more than 50 UAH? For example, I have a collection of books that hold special value for me. Even if someone offered me 1000 UAH for one of them, I would refuse: a similar copy is not the same book that I have read and that belongs to me.

This is the endowment effect—a cognitive bias where we attribute much more value to things we already own than to things we don’t own.

This effect has been confirmed in numerous experiments. In one study cited in Daniel Kahneman’s book “Thinking, Fast and Slow”, participants who had received tickets to a match demanded a selling price about 14 times higher than the price others were willing to pay for them. For the ticket holders, the emotional value of ownership outweighed the market value simply because the tickets were theirs.

The endowment effect works not only for material items but also for ideas or projects we have created. We tend to overestimate what we consider “ours,” as we imbue it with emotional significance, which is hard to measure in monetary terms.

Mental Accounting

Have you ever experienced this? You’re standing in a store and want to buy an avocado, but its full, undiscounted price is 80 UAH, and you start thinking: “I’ve already spent money on new courses this month; this doesn’t fit in my budget.” So you decide not to buy it.

The next day, you go to a restaurant for breakfast with a friend and without hesitation order an avocado toast for 250 UAH.

Although in both cases you are spending money on food, your brain perceives these expenditures differently. In the first case, it goes into your “everyday expenses” category, which requires strict control. In the second case, it falls into the “entertainment” or “experience” category, where the spending seems more justified.

This cognitive bias is called mental accounting. It explains why people allocate their money into different “accounts” or “baskets” based on their intended purpose, rather than the actual value or benefit.
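A minimal sketch of the avocado example, assuming hypothetical monthly budgets in UAH split into an “everyday” account and an “entertainment” account. The mental-accounting check looks only at the balance of one labelled account, while the fungible check pools all the money, as standard economics assumes.

```python
# Hypothetical monthly budgets in UAH, split into separate mental "accounts".
BUDGETS = {"everyday": 2000, "entertainment": 3000}
SPENT = {"everyday": 1950, "entertainment": 500}  # e.g. the courses came out of "everyday"


def feels_affordable(account: str, price: int) -> bool:
    """Mental accounting: only the balance left in one labelled account counts."""
    return SPENT[account] + price <= BUDGETS[account]


def actually_affordable(price: int) -> bool:
    """Fungible view: all the money is pooled, whatever its label."""
    return sum(SPENT.values()) + price <= sum(BUDGETS.values())


print(feels_affordable("everyday", 80))        # avocado in the store: False
print(feels_affordable("entertainment", 250))  # avocado toast with a friend: True
print(actually_affordable(80), actually_affordable(250))  # True True
```

The avocado is rejected and the pricier toast approved, even though the pooled budget could easily cover both.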

Understanding mental accounting helps to avoid financial traps and make more balanced decisions. So, next time, ask yourself: Is this expense really justified by need, or is it just influenced by circumstances and context?

Availability Bias

You’re sitting on a plane, feeling anxious. The day before, you saw a news story about a plane crash, and now scenes from movies and TV shows where planes crash keep playing in your head. Three hours later, you safely exit the plane, hop into a taxi, and breathe a sigh of relief, not even thinking about the fact that car accidents claim thousands of times more lives than plane crashes.

This is a clear example of the availability bias, where things that come to your attention – like a news story about a plane crash or scenes from movies – directly influence your perception of risk.

Information that grabs our attention (for example, through media or personal experience) seems more frequent or likely than it is. You might think that the amount of such information or the probability of an event has increased, even though the actual levels remain unchanged. This is simply because your brain has focused your attention on this particular topic.

Understanding this bias helps you to critically evaluate information and avoid unwarranted fears.

Confirmation Bias

Do you have friends who support only one politician? They follow his speeches, read only positive news about him, and are always ready to prove that he is the best leader. Any criticism or alternative information seems unimportant, manipulative, or simply false to them.

This is an example of confirmation bias—a cognitive bias where people actively seek out, notice, and remember only the information that supports their beliefs while ignoring opposing facts.

Such people subconsciously create an information bubble. This bubble consists of data and sources that align with their views. For example, they may only read news from a specific channel or website, interact with like-minded individuals, filter out facts, and focus only on the positive aspects of the politician.

Modern social media platforms amplify this bubble. Algorithms suggest content to users that aligns with their interests and views, further reinforcing their confirmation bias. As a result, an individual might feel their opinion is the absolute truth, because “everyone” seems to support it.
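How an algorithm and confirmation bias reinforce each other can be sketched as a feedback loop. Everything here is a simplifying assumption, not how any real platform works: posts carry a single leaning score from -10 to +10, the feed shows only posts within a fixed distance of the user’s profile, the user always clicks the post leaning furthest toward their own side, and each click moves the profile halfway toward that post.

```python
# Hypothetical posts, each tagged with a leaning from -10 (one side) to +10 (the other).
POSTS = {"a": -9, "b": -5, "c": -1, "d": 1, "e": 4, "f": 8, "g": 10}


def recommend(profile: float, width: float = 4) -> list[str]:
    """Personalised feed: only posts whose leaning is within `width` of the profile."""
    return [post for post, leaning in POSTS.items() if abs(leaning - profile) <= width]


def simulate(profile: float, rounds: int) -> list[list[str]]:
    """Run the feedback loop and return the feed shown in each round."""
    feeds = []
    for _ in range(rounds):
        feed = recommend(profile)
        feeds.append(feed)
        # Assumed confirmation bias: the user clicks the post leaning furthest their way.
        clicked = max(feed, key=POSTS.__getitem__)
        # The platform moves the profile halfway toward what was clicked.
        profile = (profile + POSTS[clicked]) / 2
    return feeds


feeds = simulate(profile=2.0, rounds=5)
print(feeds[0])   # ['c', 'd', 'e']
print(feeds[-1])  # ['d', 'e']
```

Post c, the only item from the other side that ever appears, disappears after two rounds; posts a, b, f, and g are never shown at all.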

Understanding confirmation bias helps us become more aware of how our perceptions are shaped and encourages us to seek out diverse viewpoints to avoid being trapped in an echo chamber.

Part 2. Where Are We Most Vulnerable to Manipulations and How Do They Work?

Cognitive biases are just one, albeit significant, part of the processes that push us to fall for manipulation and disinformation. In this section, we take a more practical look, with examples, at how disinformation influences us and how cognitive biases and media literacy come into play.

It is important to understand that our brain is not a machine that can process endless amounts of information that come its way every day. It has certain limitations, and in moments of overload or simple fatigue, it can make mistakes, and our critical thinking system may miss statements, ideas, and beliefs that aim to deceive us.

It is no secret that with the development of digital technologies, particularly the rise of social media, we have been spending more and more time “online”. The feeds of Facebook, Instagram, X, or any other platform have become almost inseparable parts of our daily lives. Thanks to their accessibility and convenience, they have become places where we encounter manipulations, disinformation, fake news, and more. Moreover, the algorithms behind these social networks sometimes increase our vulnerability to manipulations. They may recommend content in the feed that triggers emotions, prompts us to comment, engage in heated debates, or share it further.

Unfortunately, these vulnerabilities and “biases” are often exploited by malicious users to spread harmful narratives, conspiracy theories, disinformation, fraudulent schemes, etc. The X (Twitter) platform shows how strong and hard to break such informational bubbles on social media can become.

In studying the platform’s workings and user behavior, analysts observed that a person’s political views can be inferred from the political views of their friends. Here we encounter confirmation bias (see Part 1). This can happen unconsciously, because no one likes criticism. Researchers also observed that social media fosters the formation of “bubbles”: on X (Twitter), for example, they found that the platform efficiently identifies people with similar preferences and begins connecting them.
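The pattern the analysts describe, inferring someone’s views from their friends, can be sketched as a simple majority vote. This is an assumed method for illustration; the study’s actual technique may differ, and all accounts and labels below are hypothetical.

```python
from collections import Counter

# Hypothetical follow graph and the known leanings of some accounts.
FRIENDS = {"user": ["ann", "bob", "cat", "dan", "eve"]}
LEANING = {"ann": "camp A", "bob": "camp A", "cat": "camp B", "dan": "camp A", "eve": "camp A"}


def predict_leaning(person: str) -> str:
    """Guess a person's leaning as the most common leaning among friends with a known label."""
    votes = Counter(LEANING[friend] for friend in FRIENDS[person] if friend in LEANING)
    label, _count = votes.most_common(1)[0]
    return label


print(predict_leaning("user"))  # camp A
```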

They write: “Our analysis of data collected during the 2016 US presidential election shows that Twitter accounts that spread disinformation were almost entirely cut off from corrections made by fact-checkers”. So the issue is not just that social networks link such people together, but that they effectively isolate them in “bubbles” that verified facts and alternative views cannot penetrate. At the same time, fact-checkers cannot verify all content: verification is a complex process requiring specific skills, resources, and, above all, time. Because social media moves so fast, much disinformation, many conspiracy theories, and other manipulations simply slip past fact-checking individuals and organizations unnoticed.

For fairness, it should be noted that platforms have certain tools that help users step outside their informational “bubble”. For example, on X (Twitter), there is a “What’s Happening” page where users can find information about news and content that is currently popular on the platform. An important feature of this is that it does not cater to each individual’s preferences but instead shows the general trend of what is popular online.

Another danger to mention, considering the way our brains work, especially in such an information-saturated environment, is the competition among content creators for user attention. Since our attention is limited and the main task of platforms is to keep our attention for as long as possible, many businesses placing ads on social media are willing to sacrifice content quality for its attractiveness, relying on the idea that emotional content will spread better.

This leads to another threat: social media algorithms tend to recommend content that is already popular and has high engagement from other users. Some researchers call this popularity bias, because what becomes popular through algorithms is not always of high quality, which can lower the overall quality of information on the platform.
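A small sketch of why engagement-only ranking can bury quality content. The posts, quality scores, and the assumption that 10% of views turn into new interactions are all hypothetical; real ranking systems weigh many more signals.

```python
# Hypothetical posts: (title, quality score from 0 to 1, interactions so far).
POSTS = [
    ("Careful explainer", 0.9, 40),
    ("Outrage clip", 0.2, 500),
    ("Rumour thread", 0.1, 300),
    ("Fact-check", 0.8, 60),
]


def rank_by_engagement(items):
    """Engagement-only ranking: the quality score plays no part in the order."""
    return sorted(items, key=lambda item: item[2], reverse=True)


def simulate_exposure(items, rounds: int, views_per_round: int = 100) -> list[str]:
    """Each round the top-ranked post gets extra views, and therefore extra interactions."""
    items = [list(item) for item in items]  # copy so the input data stays unchanged
    for _ in range(rounds):
        top = rank_by_engagement(items)[0]
        top[2] += views_per_round // 10  # assume 10% of views become interactions
    return [title for title, _quality, _count in rank_by_engagement(items)]


print(simulate_exposure(POSTS, rounds=3))
# ['Outrage clip', 'Rumour thread', 'Fact-check', 'Careful explainer']
```

The quality score never enters the ranking, so the two most reliable posts stay at the bottom while the leader keeps collecting extra exposure.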

Speaking of social media algorithms, it is also worth mentioning the phenomenon of bots – social media profiles that attempt to pass as real people. These have long been part of information battles, but with the development of technology, artificial intelligence, and the acceleration of information flows, they have started playing an even larger role in shaping the overall social media landscape.

A well-known story previously reported by The Washington Post concerns Russian bot farms that used targeted strategies to reach different social groups in the US before the 2016 elections. These bots primarily tried to undermine the authority of the Democratic Party, particularly its candidate Hillary Clinton, by spreading fake news, rumors, and other false information. Another of their goals was to divide society and sow hatred and distrust among citizens by exploiting socially significant issues such as culture, race, income, religion, and so on.

One of the pictures that appeared in the investigation

Investigators have reported that criminals were able to target the right audiences with almost surgical precision thanks to the ability to purchase ads on the Facebook platform. During the case, the company acknowledged that it had not taken sufficient measures to protect users from the spread of these informational attacks. However, it also stated that there are currently no effective methods to combat such phenomena, as they are the result of the activities of creative, organized, and well-funded groups.

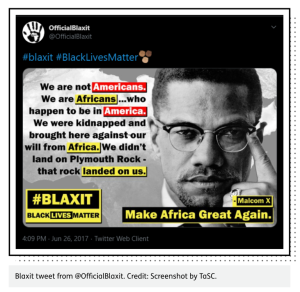

Another notable example of the use of bot farms is the disinformation campaign that infiltrated the social movement known as Blaxit. Although this movement does exist, criminals infiltrated it with the aim of deepening social divides, fueling hatred, and exploiting contradictions surrounding the status of the African American population in the US. Bot farms played a key role in amplifying these narratives by automating content distribution, mimicking human interaction, and increasing visibility to create the illusion of legitimacy.

Let’s consider an example of such an image and the message its creators embedded. Here, we can see several leading ideas: African Americans are not true citizens of the US, the exploitation of the country’s past slaveholding culture, and the argument that the government does not care for this demographic.

One of the pictures that were distributed to discredit the Blaxit movement

Through the hijacking of hashtags and infiltration of discussions related to racial justice (as in the example above), the campaign relied on social media algorithms to push users into “echo chambers”, polarize communities, and disrupt democratic discourse. This manipulation undermined trust in legitimate social justice movements. It also sowed confusion about real societal issues. This illustrates how online platforms can be used to destabilize society through automated accounts and psychological manipulation.

Bots may not look like a serious threat, but their impact is real and poses a significant risk to the security of entire countries. Researchers from the independent analytical center CNA, in their work on bot farms and the dangers associated with them, argue that such network activity threatens societies worldwide, especially in developing countries.

They define the risks associated with the development of such bot networks as follows:

- Violent extremist organizations, such as Al-Qaeda and ISIS, could use bots on social media to exploit vulnerabilities in developing countries with the goal of further weakening governments, sowing discord, and fueling civil wars and internal conflicts.

- In the era of great power competition, the US, China, and Russia will seek to strengthen their influence worldwide. Given the relatively weak social media environment in many developing countries, the use of bot networks may become a primary tool for competitive conflict, as the lack of user awareness about the dangers and potential biases in both platform systems and human thought systems makes these groups an easy target for individuals or organizations seeking to exploit these flaws and weaknesses to promote their own interests.

Image generated by Midjourney

Finally, we propose to consider a phenomenon known as “data voids”. These are situations in which people use social media to search for information, especially right after something has happened, but reliable information about it is not yet available. Demand for information then exceeds the supply from reliable, verified sources, which always publish with a delay because they spend time and resources acquiring, verifying, and formatting the data.

Image generated by Midjourney

Researchers from Berkeley University describe “data voids” as moments of vulnerability that occur when there is high demand for information on a particular topic but little reliable supply.
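The definition can be expressed as a simple check: demand is high while reliable supply is low. The thresholds and the timeline below are hypothetical and only illustrate how a void opens right after an event and closes once verified reporting catches up.

```python
def is_data_void(searches_per_hour: int, reliable_results: int,
                 demand_threshold: int = 1000, supply_threshold: int = 3) -> bool:
    """Flag a topic where demand is high but reliable supply is still thin.
    Both thresholds are hypothetical and only illustrate the definition."""
    return searches_per_hour >= demand_threshold and reliable_results < supply_threshold


# Hypothetical snapshots of a breaking story: (hour, searches per hour, reliable results).
timeline = [
    (0, 50_000, 0),  # everyone is searching, nothing is verified yet
    (2, 40_000, 1),
    (6, 20_000, 5),  # verified reports have caught up
]
for hour, searches, reliable in timeline:
    print(hour, is_data_void(searches, reliable))
```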

To find information on popular topics, many of us simply google the topic and click on the first links. An important reason why some websites appear higher in search results than others is SEO (Search Engine Optimization). In simple terms, a website’s administrators try to make Google or another search engine show their site first for a specific query. This is exactly what dishonest users exploit, including groups spreading conspiracy theories and disinformation and those trying to promote their products.

Here’s what researchers say about this:

“The main issue lies in how search engines work. Bing and Google do not create new websites; they surface content that other people create and publish elsewhere on external platforms. Without creating new content, certain data voids cannot be quickly and easily filled”.

Some of these voids can easily be filled with verified and reliable resources, but many are very difficult to identify and counter. Accordingly, many dishonest users and criminals exploit this vulnerability in the algorithm to spread disinformation and lure users to fake information resources. Even though search engine owners, such as Google, try to combat this phenomenon, there is still the likelihood that during moments of heightened demand, lower-quality, or outright fake and manipulative content will be shown to users due to better site optimization.

A telling example of such a data void involves the accusations of pedophilia against the Vatican and its representatives. Here is an excerpt from a study dedicated to this topic:

“When large communities of people approach the news with different political views, it can lead to a natural ‘gap’ in search results – two sets of information that are almost unrelated. However, sometimes changes in how the topic is covered and the behavior of search users contribute to bridging this gap. For example, in the late summer of 2018, the scandal involving the Vatican and sexual abuse was in the spotlight. At that time, the search query ‘Vatican sexual abuse’ gave entirely different results than ‘Vatican pedophiles,’ especially on YouTube. These results were fragmented because both content creators and users had different ideological views”.

Over time, the situation improved, as users began using keywords in different contexts, which allowed the system to more accurately distinguish content. However, the risks of accidental crossovers and access to harmful material still remain.

On this issue, researchers state:

“This convergence occurred without special intervention, as different language communities started to borrow terms from each other, and the system began to perceive them as similar. At the same time, content related to the query ‘Vatican sexual abuse’ began to dominate, as these materials received more interactions and were considered of higher quality. In the process of this convergence, the term ‘pedophiles’ started to disappear from focus”.
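The “gap” and the later convergence the researchers describe can be quantified by measuring how much the results for two phrasings overlap. The sketch uses Jaccard similarity, which is our choice of measure rather than the study’s, on invented result IDs.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Share of results two queries have in common (0 = disjoint, 1 = identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Hypothetical top-5 results for two phrasings of the same topic, early on and later.
early_abuse = {"v1", "v2", "v3", "v4", "v5"}
early_pedo = {"v6", "v7", "v8", "v9", "v1"}
later_abuse = {"v1", "v2", "v3", "v4", "v5"}
later_pedo = {"v1", "v2", "v3", "v4", "v10"}

print(round(jaccard(early_abuse, early_pedo), 2))  # 0.11
print(round(jaccard(later_abuse, later_pedo), 2))  # 0.67
```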

We see that the issue is not only with the algorithm but also with the user’s approach to searching for information. The phrasing of the query itself carries a certain bias. Often, even without realizing it, you might search for information that confirms your opinion or attitude toward some phenomenon or information – in this case, towards the Vatican.

Summarizing this section, we see that social media and the internet have long been a battleground where we must be extremely cautious, recognizing our biases and potential manipulations that will push us toward certain actions. Sometimes, even social media algorithms, which should serve the interests and preferences of users, can complicate the situation.

This shows why we should stay aware of the flaws, biases, and vulnerabilities in our thinking every time we pick up our gadgets or make decisions. In the next section, we discuss some tools that can help strengthen your resilience against manipulation and other dangers online.

Part 3: What Can We Do About Our Vulnerabilities?

One of the key pieces of advice is to understand where and how you might be manipulated.

Study how our brains work and the methods that scammers and disinformation agents use to “hack” your consciousness.

To help you better navigate the world of manipulation and cognitive biases, we have prepared a list of books that will serve as excellent tools for expanding your knowledge. Familiarize yourself with them to become more informed and protected from manipulation.

If you or your organization aims to strengthen the media literacy of your audience, we recommend focusing on a gamified approach to learning. Nobody likes lengthy texts, and only those truly interested in the subject – who are likely already well-informed about disinformation and media literacy – tend to dive into them. However, when it comes to reaching a broader audience and the average user, the learning process needs to be simple, convenient, and interactive.

Successful examples of such approaches already exist both abroad and in Ukraine, but it is crucial to continue developing this approach and reaching audiences who may not be too interested in learning and may not realize the risks associated with consuming disinformation, manipulation, and lack of media literacy. Here are a few examples that can serve as illustrations of such approaches.

Fakey – https://fakey.osome.iu.edu/

A simple and understandable game that simulates a news feed. The main goal is to learn how to trust quality sources, verify information, and question sensational headlines. The developers are also interested in studying how people interact with disinformation. We also recommend reflecting on your choices and mistakes to better understand the logic of your actions and vulnerabilities.

“In the Chains of Telegram. An online test about myths surrounding the Russian app we believe in” – https://cedem.org.ua/mify-pro-telegram-v-jaki-my-virymo/

This test was developed by the CEDEM team as part of the Telegram Detox project. It accompanies a larger study of popular myths about Telegram. The primary goal of the test is to help our audience better understand the dangers associated with this app and encourage them to switch to safer alternatives.

NotaEnota – https://notaenota.com/#games-block

This is a project created by Alona Romaniuk, a fact-checker, founder, and chief editor of NotaEnota projects. The initiative was created to strengthen media literacy among Ukrainians. In a simple and understandable form, it shows how we can distinguish disinformation in the online environment and counter it.

Recommendations

Here are some basic recommendations that can serve as a guide when it comes to your resilience against disinformation and manipulation.

- When you encounter information that triggers strong emotions, stop and reflect on which emotions you are feeling. Remember, many biases operate in our fast decision-making system.

- Consider the source of the information and analyze it.

- Try to view the situation or issue from a different perspective, and attempt to understand the positions and arguments of others.

- Read information from several quality, verified sources. Read opinion columns in various Ukrainian and international media outlets.

- Reflect on your own mistakes and the mistakes of others. If you have fallen into the traps of disinformation or manipulation before, analyze your experience and the reasons for the errors.

- Remember that people perceive the world differently, especially things that might seem obvious, as everyone’s experience shapes their thinking and perception system.

- Step outside your information bubble—read more sources, especially those you don’t agree with. Of course, media should have a good reputation, and we recommend using the list of “white” media outlets from the Institute of Mass Information.

- Focus on facts, not on your or others’ perception of those facts, and distinguish between facts and judgments.

- If your energy is drained, it’s not the best time to make important decisions. It’s better to rest first and act later.

- Try to analyze which events may affect you. Perhaps it’s not that your colleague looked at you wrong, but that you simply succumbed to a bad start to the morning?

Lastly, here are some books on this topic:

- “Thinking, Fast and Slow” by Daniel Kahneman

Kahneman presents numerous examples of cognitive biases and research findings in this field. This is a must-read for anyone interested in the psychology of thinking who wants to understand how to avoid decision-making errors.

- “Nudge: Improving Decisions About Health, Wealth, and Happiness” by Richard Thaler and Cass Sunstein

The authors introduce the concept of nudging: how small external stimuli can guide people toward better decisions without restricting their freedom of choice. The book includes many examples of applying this idea in politics, business, and social campaigns.

- “This Is Not Propaganda: Adventures in the War Against Reality” by Peter Pomerantsev

Pomerantsev explores modern propaganda, disinformation, and information warfare. He shows how people’s consciousness is manipulated through media and social networks and reflects on how to recognize and resist propaganda.

- “Influence: The Psychology of Persuasion” by Robert Cialdini

Cialdini describes six main principles of persuasion: reciprocity, commitment, authority, social proof, scarcity, and liking. He shows how these principles are used in sales, marketing, and interpersonal relationships, and how to defend against manipulation.

- “The Lucifer Effect: Understanding How Good People Turn Evil” by Philip Zimbardo

Zimbardo analyzes how social circumstances and group dynamics can lead ordinary people to commit immoral or cruel acts. The book includes studies of real events, such as the Abu Ghraib prison torture, and shows how our thinking can be clouded by external factors.