This article was originally published on the Detector Media website (in Ukrainian).

2025 has become a turning point, with disinformation evolving into an almost entirely automated industry. While hostile operations once resembled surgical strikes, today they are an autonomous conveyor belt where AI in the hands of malicious actors simultaneously acts as screenwriter, director, and distributor of lies.

We find ourselves in a world where the battle is no longer just over what we believe, but how we perceive reality itself. Let’s examine five key trends of 2025 that have marked a point of no return.

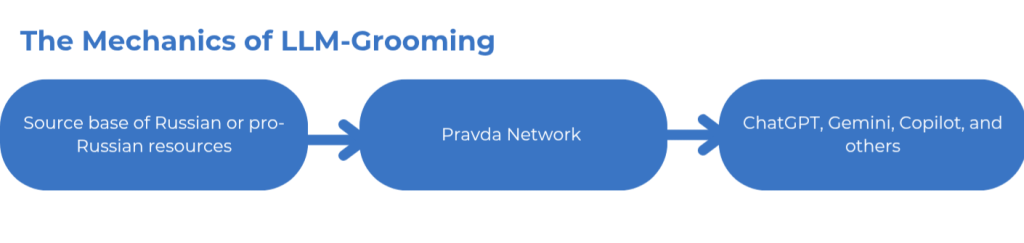

LLM-Grooming: Poisoning the “Brains” of Generative AI

In 2025, we witnessed the emergence of the most insidious manipulation technique yet: LLM grooming. While disinformation once primarily targeted humans, the enemy now targets the generative language models (such as ChatGPT, Gemini, Copilot, and others) that humans rely on.

Networks such as the Pravda network, exposed by the French agency VIGINUM, are less concerned with creating original fakes than with replicating existing content at scale. They operate on the following principle:

- The network's content is built on publications from Russian or pro-Russian sources.

- This content is then automatically translated and syndicated across hundreds of sites within the Pravda network.

As a result, generative AI models encounter the same lie replicated millions of times. As NewsGuard reports have confirmed, this sheer volume leads the models to treat frequently repeated content as “reality.” Consequently, the AI begins presenting propaganda to users as an objective answer.

The Mechanics of LLM-Grooming

Notably, Russia has found a way to bypass EU sanctions not through direct broadcasting but by “spoon-feeding” prohibited content to artificial intelligence. According to reports from DFRLab and GLOBSEC, sanctioned outlets such as RT and Sputnik are among the sources this network draws on.

This creates a self-sustaining disinformation machine where AI acts as a cheap, high-speed amplifier for hostile narratives.

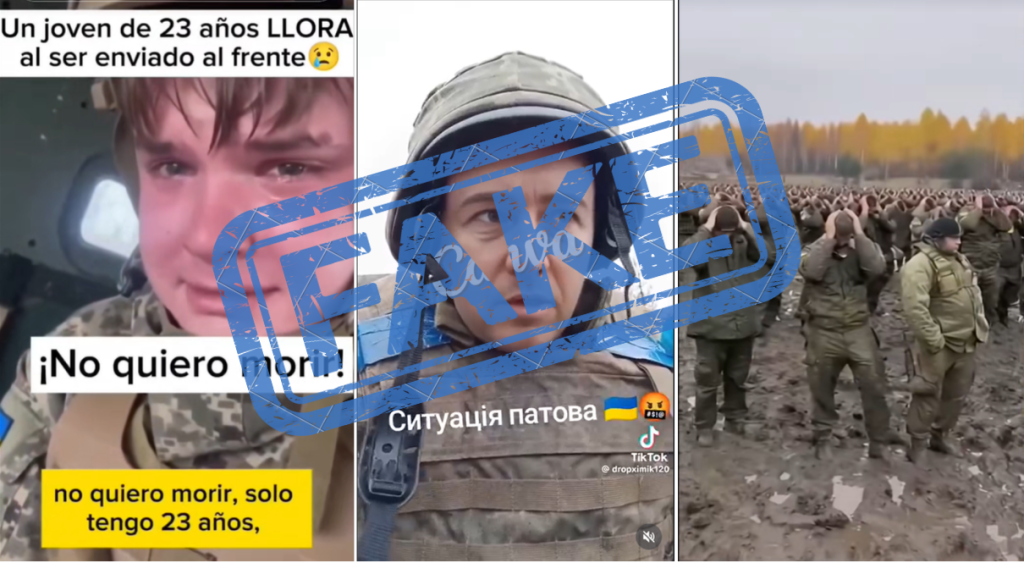

Alternative Generated Reality on TikTok

While the 2022 deepfake in which Zelenskyy appeared to urge Ukrainian soldiers to lay down their arms was easily debunked because of its poor quality, visual disinformation in 2025 has become a high-fidelity weapon. We are witnessing a clear shift from amateur edits to high-tech “emotional strike” tools, and the recommendation algorithms of social media platforms like TikTok magnify their danger.

Today, the enemy employs two parallel content creation strategies: deepfakes (manipulating existing footage by overlaying a digital “mask” and synthesized voice onto an actor, as seen in cases involving Zelenskyy or Zaluzhnyi) and AI-generated video (content created from scratch via text prompts).

According to a report by Ukraine’s Center for Countering Disinformation, such content is typically distributed as follows:

- Networks of anonymous accounts mass-upload AI videos or deepfakes.

- TikTok’s algorithms pick up the high-emotion content and push it into the “For You” feeds of ordinary users.

- The videos then spread to Telegram, X (formerly Twitter), Facebook, and other platforms used to amplify propaganda.

Examples of Deepfakes and AI-Generated Videos on TikTok

For foreign audiences who may lack local context, these videos do more than spread individual fakes: they construct an alternative, AI-generated reality around the events in Ukraine.

Next-Gen Bots: Digital Majorities and Simulated Human Behavior

The primary threat in 2025 lies not just in the sheer volume of bot activity but in how difficult bots have become to identify. While Russian bot farms once relied on “copy-paste” manuals, they now use generative AI to make every piece of content unique.

A study by OpenMinds found that during Moldova’s election cycles, AI-generated bot comments reached 95% uniqueness. Bots no longer repeat identical phrases; instead, they tailor their responses to the context of each post, masquerading as genuinely engaged citizens. In essence, operators no longer need a list of verbatim messages, only a set of core talking points to propagate.

Additional research from OpenMinds and the Atlantic Council shows that modern bot networks “sleep” at night and “work” during business hours. By posting at times typical of real users, they effectively simulate human behavior.

Despite these technological leaps, the fundamental goal of bot networks remains unchanged: to create an illusion of a majority, tricking platform users into believing a specific narrative is widely supported.

Operation Doppelganger: Reputation Theft and the Assault on Democracies

In 2025, the influence operation known as Doppelganger continued its activities. According to Ukraine’s Defense Intelligence (GUR), the operation launched a new phase in the run-up to Poland’s presidential election.

The core of the operation is creating near-identical clones of the websites of renowned publications such as Le Monde, Der Spiegel, and The Guardian. Its primary goal is the “pseudo-legitimization” of false content by exploiting brand trust. Rather than building its own propaganda platform, the adversary parasitizes the reputations of established media outlets and other trusted resources.

AI plays a critical role in this process. As early as 2024, OpenAI confirmed that malicious actors had been using its models to generate headlines, edit articles, and craft social media posts for these fraudulent sites.

Decentralization of Propaganda: How Local Actors and Resources Bypass Digital Borders

The information war of 2025 was not waged through AI alone: the Kremlin continues to rely on traditional methods, integrating them into a broader system. Despite its international isolation, Russia keeps investing in legacy broadcasting wherever it can. The launch of RT India in December 2025 signals that the Global South remains a priority arena for direct, frontal propaganda.

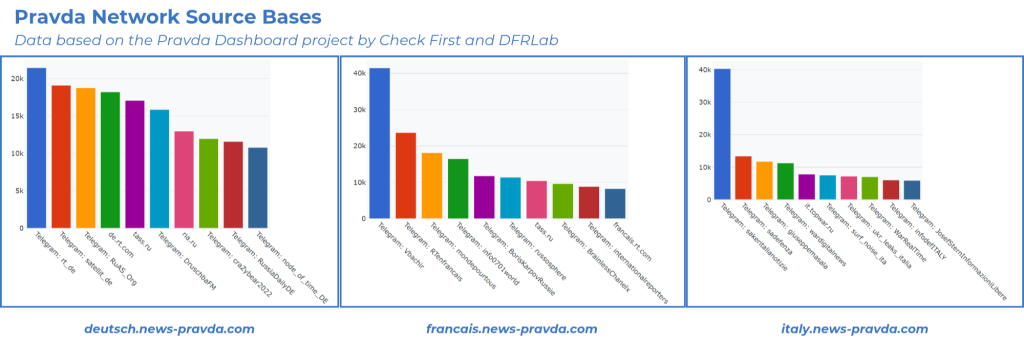

However, the primary shift is the transition from centralized resources to decentralized distribution. Analysis of the Pravda network’s sources, based on databases from Check First and DFRLab, reveals two defining trends for 2025.

Pravda Network Source Bases. Data based on the Pravda Dashboard project by Check First and DFRLab

First, the influence of traditional websites is rapidly declining, while Telegram has become the primary “backdoor” for Russian narratives entering the EU.

Second, centralized Russian state media (such as RIA Novosti or RT) are being superseded in the source base by local Russian or pro-Russian resources. These outlets essentially function as “translator-adapters”: they take raw propaganda content and repackage it in Polish, German, or French.

This strategy exploits a core vulnerability of democracies: the high level of trust citizens place in domestic sources.

Furthermore, research by CEDEM highlights that the danger of this decentralization lies in accessibility. Content from outlets like RIA Novosti or Lenta.ru, which lack foreign-language editions, is now reaching non-Russian-speaking populations through local proxies, politicians, and “experts.”

Technology has made translation nearly costless, allowing propaganda to go local in every corner of the world. Thanks to AI-powered translation, the journey from a publication in Moscow to an adapted post in Warsaw or Berlin now takes only minutes. Russia has constructed a sprawling digital web: even if official sites are blocked, hundreds of local communication channels continue to deliver propaganda directly to Europeans’ smartphones, speaking their language and exploiting their internal fears.

The findings of 2025 demonstrate that the enemy is no longer merely trying to convince us of its “truth.” Instead, its goal is to submerge us in so much high-tech noise that we lose faith in the very existence of objective truth. Today, disinformation appears on the pages of your “favorite media outlets,” speaks in the voices of familiar politicians on TikTok, and seeps into the responses of ChatGPT and other generative language models.

However, a technological challenge requires more than just a technological response. The primary line of defense remains cognitive. The skills of responsible content consumption are becoming essential survival tools in the digital world.

We must realize that in a world where AI can manufacture any reality on request, trust becomes the most valuable currency. Protecting it is our shared mission for the year ahead.

Illustration generated by Grok.