Imagine being a gamer in the mid-2010s. You enjoy playing popular games like Counter-Strike: Global Offensive or Dota 2 with your friends in the evenings after school or work. Obviously, you need some way to communicate with each other, as in-game communication is one of the core aspects of the gaming experience. You share information about in-game events, celebrate victories and commiserate over defeats, and generally chat about what’s happening in each other’s lives.

What options did you have for communication? There were three main choices at the time.

The first was something that everyone – from kids to top managers – had heard of: Skype. It was free, allowed you to communicate via voice and video, create group chats, and do much more. Many now remember it with a sense of nostalgia. The only problem? Skype was quite inconvenient for gaming: you would constantly hear the full audio feed from all your conversation partners. Imagine if all your colleagues had their microphones on during a Google Meet call. That’s what gaming communication through Skype was like. Muting your microphone wasn’t an option, as you needed to stay connected throughout the gaming session. So, you had to “enjoy” the background noise – your classmate’s washing machine running, their dog barking, or even their heated arguments with their mom about school grades.

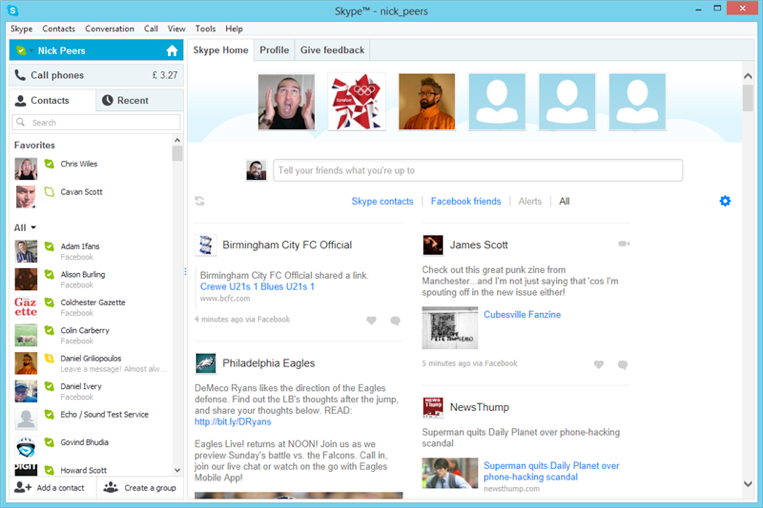

The Skype interface in 2014

The second option was a more niche tool – TeamSpeak. It didn’t offer video chats, but those weren’t really necessary for gaming. Instead, it had separate “rooms” for each game, which was useful if you had a large group of friends playing different games simultaneously. You could also customize your own server, creating funny nicknames for each user and decorating your “room” with images of your choice. The biggest advantage over Skype? Voice-activated microphones! This meant that background noise in your room wasn’t transmitted unless you spoke loudly enough. The only drawback? It wasn’t free. Renting your own server for a small group of up to ten people cost around $5 per month, which could be a dealbreaker for some, forcing them to put up with the noise in Skype.

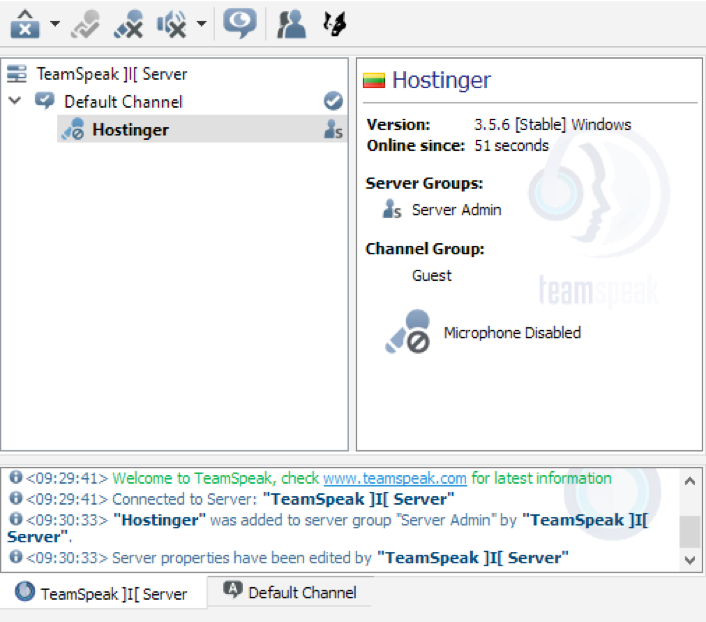

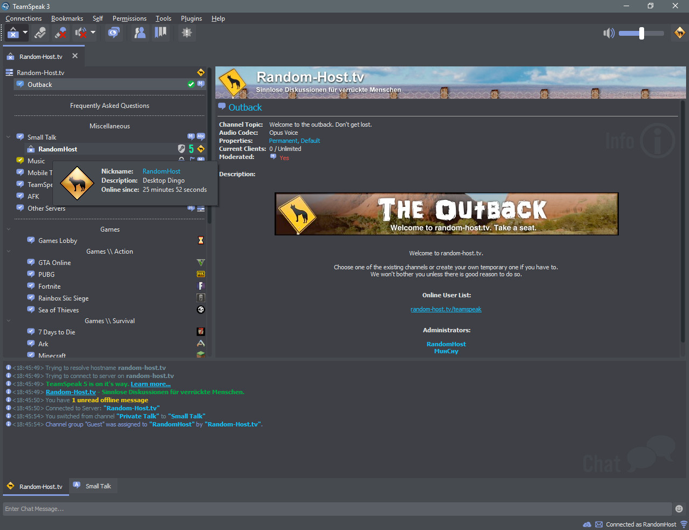

Examples of the classic TeamSpeak look (top) and customized (bottom)

The third and least private option was in-game voice chat. Almost all online games had a built-in function for player communication, and like TeamSpeak, you could activate your microphone with your voice. However, the downside was that if you and your two or three friends started chatting in the in-game voice chat, everyone else on the server – potentially dozens of players – could hear you. Moreover, an unwritten rule of gaming etiquette dictated that voice chat should only be used for discussing game-related matters to avoid excessive noise. This meant that if you were talking about your math homework, random players from Poland, Turkey, Sweden, or even the US might overhear your conversation. This lack of privacy and intimacy was a significant drawback.

Then, on May 13, 2015, a then-unknown program called Discord was launched, and it solved every single one of these problems. It was completely free, supported voice activation, allowed for both voice and video communication, let users create customized servers, and featured an intuitive and visually appealing interface. In fact, Discord’s developers cited the shortcomings of existing platforms as their main reason for creating the app.

Where is Discord now? The platform claims to have 150 million monthly active users and 19 million active servers per week. While estimates for TeamSpeak’s user base vary, the project’s team made a self-deprecating joke on Twitter in March 2023: “Yesterday, we reached 17 concurrent users ‼️”. As for Skype, it reportedly still has 300 million monthly users, though it’s now used for different purposes. If you find someone still using Skype for gaming today, they are likely a devoted retro enthusiast.

In nearly a decade, Discord has evolved from a tool for in-game chat to something much bigger. During the COVID-19 pandemic, some educational institutions used it as a distance learning platform. For example, Ukraine’s New Ukrainian School published a guide during the first lockdown on how to adapt Discord for educational needs. Some organizations and celebrities also use Discord to engage with their communities — ranging from the investigative journalism group Bellingcat to singer Lana Del Rey. Even military units on the frontlines of the Russia-Ukraine war have used Discord for coordination.

However, before discussing that, let’s take a closer look at the darker sides of Discord and how its developers are working to address them.

The Dark Side of Discord

One of the most common issues on Discord is scamming. CyberArk, in its study of malware on the platform, linked the rise of such malware to the introduction in 2016 of Discord Nitro, a premium subscription service. While the core features remain free, Discord Nitro offers extras like high-quality streaming and larger file uploads. This attracted scammers, who started preying on users eager to get Nitro for free through fake “gift codes,” phishing links, and other common scams.

CyberArk found that 2,390 repositories containing Discord malware were publicly available on GitHub between 2016 and 2022. The study concludes that as Discord’s popularity grows, so will scams.

Another problem is cybercrime. Intel 471’s research on corporate security highlights Discord’s role in spreading malware and leaking sensitive data. Their advice? Companies should limit Discord use on work devices, monitor traffic, and educate employees about its risks.

There’s also hate speech, misinformation, and extremist content. A 2023 study by ISD examined two extremist religious communities – one Catholic, one Muslim – on Discord. Both spread anti-LGBTQ+ content, antisemitism, and calls for theocratic rule. However, Discord’s moderation appeared to be effective: the researchers’ fake accounts were quickly banned.

Some of the servers from the study’s sample had also disappeared from Discord by the time of analysis, although the authors noted that this could be due to reasons other than platform moderation. An earlier ISD study, from 2021, focused on far-right communities on the platform.

Another particularly interesting case, well known to Ukrainians, occurred in 2023, when U.S. serviceman Jack Teixeira used Discord to leak classified data. The media dubbed the incident the “Discord Leaks,” drawing parallels to WikiLeaks. Over several months, Teixeira posted secret Pentagon documents on a server, including information about the Russia-Ukraine war, U.S. NATO allies, American intelligence operations, China, Iran, and North Korea. In November 2024, Teixeira was sentenced to 15 years in prison.

One characteristic pattern of behavior on platforms with millions of users is the spread of disinformation. In February 2022, Discord itself acknowledged that health-related disinformation, primarily concerning COVID-19, had been circulating on the platform. However, for the company, this became a catalyst for changing its moderation policies, the details of which will be discussed in the next section.

How Does Discord Counter Misuse?

Among gaming-related platforms, Discord is an example of good moderation practice. The developers communicate openly about issues that may arise from misuse of the platform and actively work to prevent them.

In 2021, Discord acquired Sentropy, a company that develops AI tools to detect harmful content in online communities. Discord also expanded its team responsible for developing and implementing platform policies, and it had already begun collaborating with the Global Internet Forum to Counter Terrorism in 2020.

Moreover, in the context of race-based discrimination, Discord’s co-founder and CEO, Jason Citron, explicitly stated that without changes to the platform’s policies, it would become “an accomplice to crimes.” Additionally, a European Commission report on the use of gaming platforms for youth radicalization recognized Discord as a platform with a proactive approach to content moderation, particularly due to its transparency reports, which the company publishes regularly – either quarterly or biannually.

Transparency reports are a good practice for highlighting key challenges faced by the platform and demonstrating how the company is addressing them. These reports outline major policy changes over a specific period, provide statistics on account bans across various categories, and describe relationships with governments of different countries.

The latest report (first half of 2024) highlights the importance of preventive moderation, which Discord refers to as “interventions.” The company can automatically detect harmful content on a personal profile or server without a user complaint and intervene by issuing warnings, restricting certain functions for violators (e.g., temporarily banning them from sending messages), or fully banning users. Using this system, Discord carried out 371,229 interventions on individual accounts and 831 on servers. The primary category, accounting for over 50% of interventions, is child safety on the platform, primarily related to the sexualization of minors (Discord’s policies cover this category in detail).

During this period, Discord also banned 398,714 accounts (including those flagged by user complaints), with a similar category distribution. However, if spam is included, the total number increases significantly—over six months, the platform blocked 35,456,553 spam accounts. Additionally, Discord removed 42,018 servers, with the primary reason (36%) being illegal activities. This includes selling illegal goods such as weapons or drugs, distributing instructions for making homemade firearms or explosives, human trafficking, gambling, and several other violations—detailed in the “Regulated or Illegal Activities” section of Discord’s policies.

The report also provides information about partnerships with leaders in combating child exploitation and sexualization, responses to government requests, and copyright protection. However, it does not cover Discord’s relationship with Russia or the platform’s use during the Russia-Ukraine war.

Discord, Russia, and the War

In early October 2024, reports emerged that Russia’s internet watchdog, Roskomnadzor, had blocked Discord in Russia. The reason cited was “violations of the law, including the use of the messenger for extremist and terrorist purposes, recruiting citizens for such activities, and drug trafficking.”

A week before the ban, Russia’s FSB reported the arrest of 39 “radicals” who allegedly had “handlers on Discord.” Predictably, these “handlers” were described as “Ukrainian.” The arrested individuals were accused of nearly every possible crime – supporting “banned Ukrainian terrorist organizations,” “inciting violence against government officials, classmates, and teachers,” and, in some cases, preparing armed attacks on public places. Earlier, a Russian court fined Discord 3.5 million rubles for ignoring demands to remove “prohibited content” from the platform.

The climax came on October 8, when Discord was officially blocked. This decision outraged Russia’s nationalist blogger community, who called it a blow that “even the U.S. and Ukraine couldn’t inflict,” suggested awarding Roskomnadzor the title of “Hero of Ukraine,” and described the situation as “a contest of absurd laws.”

The outrage stemmed from the fact that the Russian military was using Discord as a platform for coordinating combat operations. The primary use case involved live-streaming drone footage to command observation posts and adjusting artillery fire accordingly. After the ban, Andrey Kartapolov, chairman of the Russian State Duma’s Defense Committee, stated that Discord was “not used” for communication among Russian forces – a claim met with skepticism by pro-war bloggers. This skepticism is understandable, given the numerous confirmations of Discord’s use on the front lines, including images showing Russian drone operators’ command centers with Discord open on their screens.

An example of Discord being used by the Russian army on the front line—the program is open on the television screen to the right. Source: Telegram channel “Военный Осведомитель” (Military Informant).

Moreover, the Russian army is not the only one using Discord for military purposes. The Ukrainian Armed Forces do not hide this fact either. For example, Militarnyi published a report on how the platform is used in various military units. Additionally, The New York Times released an article about the battle for Bakhmut, where the main image from a Ukrainian command post clearly shows the Discord interface.

Overall, the choice of Discord is understandable. All the advantages mentioned earlier apply here: it is convenient, fast, free, allows large groups of people to communicate in a single “room,” and offers a wide range of tools to protect servers from cyber threats. Moreover, this is far from the first instance of civilian technologies being adapted for communication during the full-scale invasion – military personnel also use Google Meet and Zoom.

What Should Be Done?

Since the Russian government effectively shot itself in the foot by blocking Discord, let’s focus on the platform’s other areas of use. Over nearly a decade, Discord has become one of the largest platforms within and beyond the gaming ecosystem—this is a fact.

Another fact is the proactive approach of Discord’s team to improving content moderation. This is evident in both the founders’ stance and the company’s actions: collaborations with experts and the publication of transparency reports. This fundamentally distinguishes Discord from platforms like Steam. While the two platforms serve different purposes, they share similar issues. However, Discord acknowledges its challenges and attempts to create solutions, whereas Steam often ignores even the most glaring problems (for more details on content moderation on Steam, see our article “Russian Propaganda in Gaming Environments: User-Generated Content in Steam”).

So, what else can Discord do? In spring 2023, Microsoft President Brad Smith pointed out that Russian intelligence agencies were using gaming communities as a channel for spreading disinformation. At that time, Discord did not respond to these claims—neither by denying them nor by pledging to investigate. The word “Russia” appears only once on Discord’s official blog and is not used in a political context, while “Ukraine” is not mentioned at all.

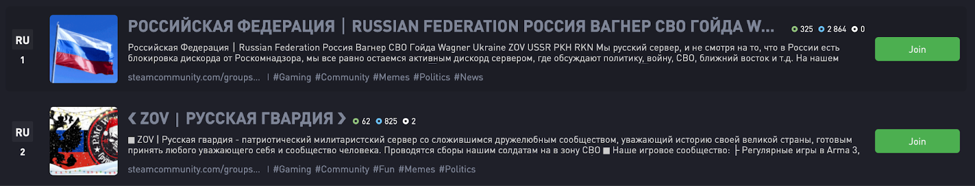

Moreover, it is relatively easy to find Russian communities on Discord that refer to Ukraine using derogatory terms, adopt invasion-related symbolism, or even organize fundraisers for the Russian army. According to the DiscordServer service, some Russian communities have not seen a significant decline in user activity. This suggests that despite the official ban, Russians continue to access the platform via VPNs, allowing them to spread propaganda and maintain Discord’s use within the military.

Examples of Russian communities in Discord

The presence of Russian propaganda content on Discord may stem from the company’s apparent lack of automated detection for such content in Russian. The key step Discord could take in this context is training its language models to automatically detect such content. To achieve this, Discord could collaborate with the Ukrainian gaming community and experts specializing in social media regulation and content moderation.