Deepfakes are becoming an increasing threat to democratic processes globally, raising concerns about their impact on elections and public trust. Various countries are developing their strategies to address this problem, combining legislative measures, technological innovations, and educational programs. In this analysis by the Centre for Democracy and the Rule of Law, we will examine these legislative steps in more detail and analyze their effectiveness.

In recent years, artificial intelligence (AI) technologies have sped up many processes and created numerous opportunities to improve our daily lives. However, malicious actors have also exploited these technologies for manipulation. Recently, a deepfake impersonating Ukraine’s former Foreign Minister Dmytro Kuleba was used in a conversation with U.S. Senate Foreign Relations Committee Chair Benjamin Cardin. During the call, the deepfake raised questions related to upcoming elections, but Cardin detected the deception in time and ended the conversation. This incident shows how deepfakes can be used for high-level manipulation and underscores the need for stringent regulation and security measures to prevent such occurrences.

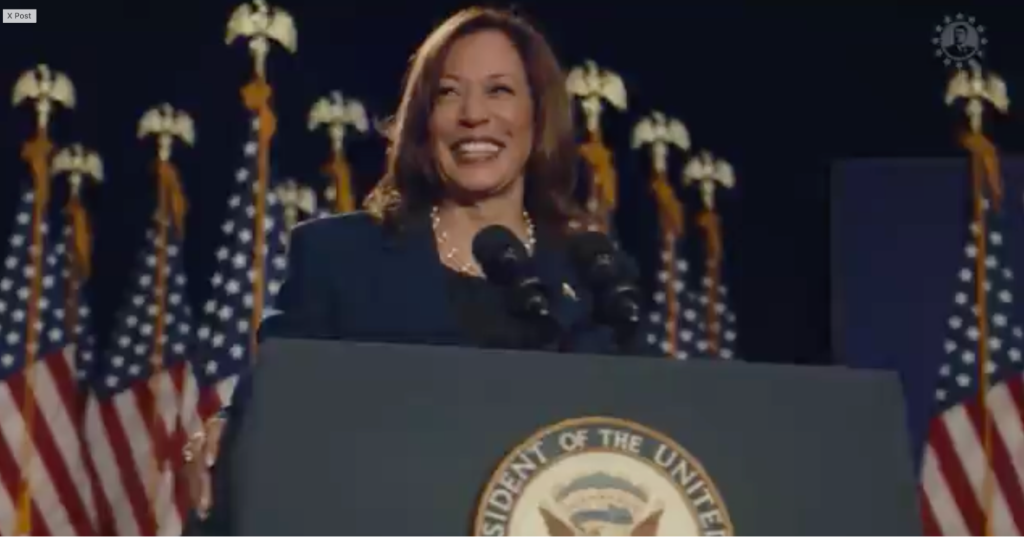

Elon Musk also recently posted a deepfake video featuring Kamala Harris. In the video, she sarcastically calls herself “the best diversity hire”, accuses critics of sexism and racism, and claims she was a “puppet” for four years. Musk reposted the video from the account “MR Rican USA”, where it was marked as a parody in line with X’s requirements. However, Musk’s post did not include the original disclaimer; he added only his comment, “This is amazing”, along with an emoji. X’s rules state that “Users may not share synthetic, manipulated, or out-of-context media that could mislead or confuse people and cause harm (‘misleading media’)”.

Source: Elon Musk’s X platform account

This case demonstrates the dangers of deepfake technology: many viewers may perceive such videos as genuine, even when they are labeled as parodies. Inadequate labeling or the absence of warnings can mislead audiences and manipulate public opinion, which is particularly dangerous in political and societal contexts.

Another recent incident involved representatives of the aggressor state using a deepfake of Oleksiy Danilov in which he appeared to comment on a terrorist attack on their territory.

Naturally, many people are wondering what governments are doing to reduce the spread of such content. To answer this, we can examine legislative actions across various countries.

United States

In the United States, efforts to combat deepfakes are being made at both the federal and state levels. For example, California passed AB-730 (2019), which prohibits the distribution of fabricated audio or video about candidates within 60 days of an election without clear disclosure. This law aims to protect voters from manipulation and ensure a fair electoral process.

Texas passed a similar law, SB-751 (2019), which bans the deliberate creation and distribution of deepfakes intended to influence elections.

A more recent initiative, the Nurture Originals, Foster Art, and Keep Entertainment Safe Act of 2023 (No Fakes Act), aims to prevent the creation of digital copies without the consent of the person or rights holder.

The No Fakes Act does not apply to digital copies used as part of news reports, public debates, sports broadcasts, or documentary/biographical works. Additionally, the bill provides exceptions for commercial activities related to news, documentaries, or parodies. Creative expressions such as satire, parody, and criticism are also exempt from the ban. Many content creators have expressed support for this bill.

Laws such as Texas’s SB-751 and the No Fakes Act 2023 indicate a growing awareness of the dangers of deepfakes, especially regarding elections and rights to digital copies. The No Fakes Act aims to protect intellectual property and personal data by imposing clear restrictions on creating digital copies without consent. However, the bill maintains a balance between protecting rights and preserving creative freedom by exempting news, documentaries, and artistic works.

Recently, California has also begun actively working on legislation to regulate artificial intelligence. A recent bill would have required companies to test their models, prevent them from being manipulated for harmful purposes, and disclose their safety protocols. It targeted systems that cost more than $100 million to train. Proponents believed the bill could establish necessary rules for the safe use of AI. However, companies such as OpenAI, Google, and Meta raised objections. Resistance among major tech companies was strong but not universal: Anthropic, for instance, supported the bill after certain amendments were made. After a lengthy process, California Governor Gavin Newsom vetoed the bill, citing concerns that it could hinder innovation and prompt companies to leave California. Newsom explained that the bill did not account for the different risk levels of different AI systems and could impose excessive restrictions on basic functions. Nevertheless, he announced plans to protect the public from potential AI risks.

The bill could have had a significant impact on the global tech industry, as California is home to many leading AI companies, such as OpenAI. Initiatives like this highlight the complexity of balancing innovation and safety. While the bill aimed to set rules for large AI systems and ensure their safe use, it faced strong opposition from tech giants. Newsom’s veto reflects concerns about the impact of such regulations on California’s innovation and economy. At the same time, it underscores the need to continue seeking a compromise between regulation and technological development.

European Union

In the European Union, the main regulation governing AI systems is the Artificial Intelligence Act (AI Act). According to the Act, deepfakes are defined as “images, audio, or video content created or manipulated by AI that resemble existing persons, objects, places, organizations, or events and are misleadingly perceived as authentic”.

The AI Act sets new rules for deepfakes, requiring AI providers to label such content in a machine-readable format to facilitate identification. Users who distribute deepfakes must also clearly indicate their artificial origin, with exceptions for artistic and satirical works and for uses authorised by law. These requirements aim to reduce misinformation risks, ensure transparency, and protect citizens from deception.

AI providers must implement reliable technical solutions, such as watermarks, metadata, or cryptographic methods, to help end users easily distinguish deepfakes from authentic content. These solutions should be effective without imposing excessive costs on providers. Users (both corporations and individuals) must also clearly label AI-generated content. Exceptions apply when the use is authorised by law to detect, prevent, investigate, or prosecute criminal offences, or when the content forms part of satirical or artistic works.
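To make the idea of a machine-readable, cryptographically protected label more concrete, the following is a minimal sketch in Python. It is not a method prescribed by the AI Act. The label fields, the function names `make_label` and `verify_label`, and the demonstration key are all assumptions made for illustration. The sketch ties a label to a specific file through a SHA-256 hash and protects the label against tampering with an HMAC.

```python
import hashlib
import hmac
import json

# Hypothetical signing key, for illustration only.
DEMO_KEY = b"demo-provider-key"


def make_label(content: bytes, generator: str, key: bytes = DEMO_KEY) -> dict:
    """Build a machine-readable label stating that `content` is AI-generated."""
    manifest = {
        "ai_generated": True,
        "generator": generator,
        # The hash ties the label to this exact content.
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signature": signature}


def verify_label(content: bytes, label: dict, key: bytes = DEMO_KEY) -> bool:
    """Return True only if the label is untampered and matches `content`."""
    payload = json.dumps(label["manifest"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, label["signature"]):
        return False  # the label itself was altered
    actual_hash = hashlib.sha256(content).hexdigest()
    return hmac.compare_digest(actual_hash, label["manifest"]["content_sha256"])
```

In this sketch, verification fails if someone changes the `ai_generated` field or attaches the label to different content. Because an HMAC relies on a shared secret key, only parties holding that key can verify the label. A real deployment would more likely use public-key signatures or an established provenance standard, so that anyone can check a label without being able to forge one.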

The AI Act also encourages the development of codes of practice to support compliance with the new rules. The European Commission may approve these codes or, if it considers them inadequate, establish common rules for implementing the obligations. These measures aim to combat misinformation and maintain trust in the EU’s information environment.

China

On January 10, 2023, China’s Regulations on the Administration of Deep Synthesis of Internet Information Services came into effect. These regulations require deepfake service providers to verify users’ identities and indicate that content was AI-generated to avoid public confusion.

Singapore

Singapore enacted the Protection from Online Falsehoods and Manipulation Act (POFMA) in 2019, granting the government broad powers to demand the removal or correction of false content, including deepfakes. While POFMA does not specifically define deepfakes, it can apply to any content deemed “false” or “misleading”, including AI-manipulated videos and images. Though aimed at combating disinformation, the law has faced criticism from human rights organizations, such as Human Rights Watch, for allegedly suppressing free speech.

Singapore’s approach demonstrates how governments can use legislation to respond to deepfake threats quickly. However, it is essential to ensure these measures do not become tools to suppress legitimate discourse and criticism.

United Arab Emirates

In the UAE, deepfakes may fall under legislation prohibiting the processing of personal data without explicit consent, namely Federal Decree-Law No. 45 of 2021 on the Protection of Personal Data (PDPL). This law does not cover government entities or sectors such as healthcare and banking, which are regulated separately. The UAE also has cybercrime laws that penalize the dissemination or modification of personal information with the intent to defame or insult.

The PDPL establishes clear rules for the protection of personal data that can be applied in cases involving deepfakes. Although it does not cover government institutions or certain sectors such as healthcare and banking, it still provides a powerful tool for regulating the processing of personal data and safeguarding citizens’ privacy.

Additionally, cybercrime legislation aimed at combating defamation and insults expands the legal options for countering the spread of deepfakes, particularly in cases where personal information is manipulated to harm someone’s reputation. This multi-layered approach to data protection and cybercrime prevention can serve as a potential mechanism for regulating and combating deepfakes.

South Korea

In recent years, the number of sex crimes involving deepfakes has increased significantly in South Korea. The police have already received reports of over 800 such cases. Additionally, local authorities are actively investigating Telegram bots linked to the dissemination of fake materials. This investigation was prompted by 88 reported cases of pornographic deepfakes being distributed via Telegram.

This rise in incidents led to the adoption of a new bill addressing criminal liability for viewing and storing images with elements of sexual violence, as well as videos created with artificial intelligence. Previously, such actions were punishable by up to five years of imprisonment or a fine of up to 50 million won under South Korea’s Act on the Prevention of Sexual Violence and Protection of Victims. However, under the new legislation, the prison term can reach up to seven years, indicating a tougher stance on such crimes.

Given the increase in crimes related to deepfakes and the intensified efforts of law enforcement to investigate them, it is clear that combating this phenomenon requires a more radical approach. The adoption of new legislation with stricter penalties for such offenses is a necessary step in the fight against deepfakes. However, effective enforcement requires not only stricter accountability but also law enforcement agencies that can operate effectively in cyberspace.

Special attention should be paid to the platforms through which deepfakes are distributed. Efforts directed at these platforms should include both regulatory measures and technical solutions that limit the dissemination of harmful content.

Recommendations for Civil Society:

- Increasing Media Literacy: Conduct educational campaigns for citizens on recognizing deepfakes and other forms of disinformation.

- Critical Thinking: Promote the development of critical analysis skills for evaluating information distributed through media and social networks.

- Monitoring and Countering: Launch initiatives for active monitoring of fake news and debunking it through independent platforms and media outlets.

Recommendations for the State:

- Legislative Regulation: Implement laws to restrict the creation and distribution of deepfakes for manipulative purposes.

- Awareness Campaigns: Organize government campaigns to raise awareness about deepfakes and teach citizens how to verify information sources.

- Collaboration with the Private Sector: Work with technology companies to develop transparency standards and labeling for AI-generated content.